AI and Automation

Nvidia boss: faster chips are best long-term option

Tuesday March 25, 2025

Nvidia CEO Jensen Huang says faster, more powerful chips are key to reducing AI development costs.

Despite huge costs and energy demands, Huang argues that accelerating AI workloads with advanced chips brings both financial and environmental benefits.

Nvidia CEO Jensen Huang has doubled down on the company’s vision that faster, more powerful chips are the most effective path to reducing the soaring costs of artificial intelligence development and deployment.

No pain, no gain: Speaking at the Nvidia GTC 2025 conference, Huang addressed growing concerns about the financial and environmental toll of training and running large AI models. “The best way to reduce the cost of AI is to accelerate it,” Huang told attendees. “If we can complete the same workload in a fraction of the time using more powerful chips, the net energy and infrastructure costs drop dramatically.”
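Huang's argument rests on simple arithmetic: the energy a job consumes is its average power draw multiplied by how long it runs. The minimal Python sketch below uses made-up figures (the wattages, runtime, and 4x speedup are hypothetical, not Nvidia specifications) to show how a faster chip that draws more power can still use less energy per workload, as long as its speedup outpaces its extra power draw.

```python
# Hypothetical figures for illustration only; not Nvidia specifications.
BASELINE_POWER_W = 500.0  # assumed average draw of an older accelerator
BASELINE_HOURS = 10.0     # assumed runtime of one fixed workload
FASTER_POWER_W = 800.0    # assumed draw of a faster, hungrier accelerator
SPEEDUP = 4.0             # assumed speedup on the same workload


def workload_energy_kwh(power_w: float, hours: float) -> float:
    """Energy for one job: average power multiplied by runtime, converted to kWh."""
    return power_w * hours / 1000.0


def saves_energy(baseline_power_w: float, faster_power_w: float, speedup: float) -> bool:
    """Energy per job falls only if the speedup exceeds the ratio of power draws."""
    return speedup > faster_power_w / baseline_power_w


def main() -> None:
    baseline = workload_energy_kwh(BASELINE_POWER_W, BASELINE_HOURS)
    faster = workload_energy_kwh(FASTER_POWER_W, BASELINE_HOURS / SPEEDUP)
    print(f"baseline: {baseline:.1f} kWh")
    print(f"faster:   {faster:.1f} kWh")
    print("net saving" if saves_energy(BASELINE_POWER_W, FASTER_POWER_W, SPEEDUP) else "no saving")


if __name__ == "__main__":
    main()
```

With these assumed numbers the job drops from 5.0 kWh to 2.0 kWh. With a speedup of only 1.5x against a 1.6x rise in power draw, the saving would disappear.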

The price of progress: His comments come amid heightened scrutiny of the AI industry’s energy consumption and a surge in demand for computational power. Training frontier models like OpenAI’s GPT-5 or Google DeepMind’s Gemini Ultra can cost tens or even hundreds of millions of dollars, largely driven by the need for vast fleets of GPUs.

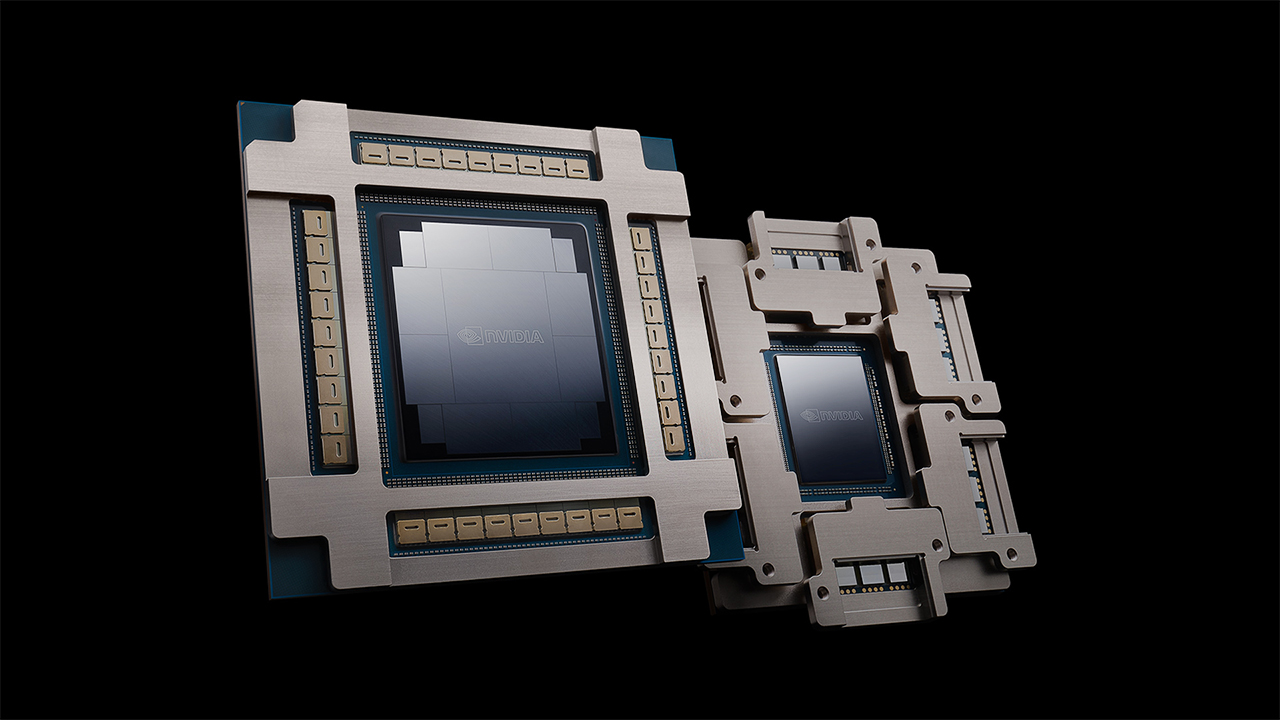

Performance boost: Nvidia, whose H100 and Blackwell B200 chips power most of the world's advanced AI systems, is positioning itself as both the beneficiary of and the solution to this dilemma. The B200 GPU, first unveiled at GTC 2024, delivers a major leap in performance and energy efficiency over its predecessor, with Nvidia claiming up to 30x faster inference for large language models.

Maximum performance, minimum footprint: By packing more compute into each chip, Nvidia claims enterprises can complete AI tasks faster with fewer chips, reducing not only capital expenditure but also power consumption and cooling costs, both key concerns in hyperscale data centers.
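The fewer-chips claim can be sketched the same way: for a fixed throughput target, the number of chips needed is the target divided by each chip's throughput, rounded up. The Python sketch below again uses hypothetical throughput and power figures (not Nvidia specifications) and counts only accelerator power, leaving out cooling, networking, and purchase price.

```python
import math

# Hypothetical figures for illustration only; not Nvidia specifications.
TARGET_THROUGHPUT = 100_000  # assumed work units per second the service must sustain


def fleet_size(target: float, per_chip_throughput: float) -> int:
    """Smallest whole number of chips whose combined throughput meets the target."""
    return math.ceil(target / per_chip_throughput)


def fleet_power_kw(chips: int, per_chip_power_w: float) -> float:
    """Total accelerator power draw in kW, ignoring cooling and networking overhead."""
    return chips * per_chip_power_w / 1000.0


def main() -> None:
    # (label, assumed units per second per chip, assumed watts per chip)
    options = [("older chip", 2_000, 500.0), ("faster chip", 8_000, 800.0)]
    for label, throughput, power_w in options:
        chips = fleet_size(TARGET_THROUGHPUT, throughput)
        print(f"{label}: {chips} chips, {fleet_power_kw(chips, power_w):.1f} kW")


if __name__ == "__main__":
    main()
```

Under these assumptions the faster chip cuts the fleet from 50 to 13 chips and accelerator power from 25.0 kW to 10.4 kW. Real-world savings would also depend on chip pricing, utilization, and workload mix.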

Betting on speed to lead: Huang’s message aligns with Nvidia’s broader strategy of maintaining its dominance in the AI hardware market by relentlessly pushing the performance envelope. As AI becomes further embedded in enterprise and consumer applications, the pressure to lower costs and carbon footprints will only intensify. For now, Nvidia is betting that speed, not scale alone, will win the race.